Intuitive Explanation of BERT- Bidirectional Transformers for NLP | by Renu Khandelwal | Towards Data Science

![Fine-Tuning Bidirectional Encoder Representations From Transformers (BERT)–Based Models on Large-Scale Electronic Health Record Notes: An Empirical Study | Semantic Scholar](https://d3i71xaburhd42.cloudfront.net/5d115b20965fd5f98d47086ccb12b345cc99c18e/3-Figure1-1.png)

[PDF] Fine-Tuning Bidirectional Encoder Representations From Transformers (BERT)–Based Models on Large-Scale Electronic Health Record Notes: An Empirical Study | Semantic Scholar

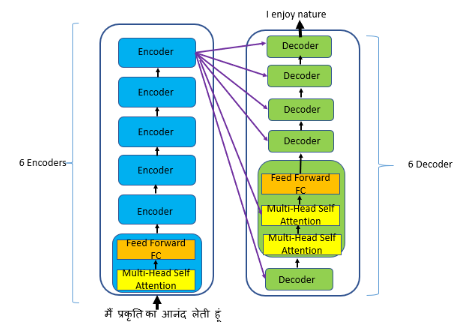

BERT — Bidirectional Encoder Representation from Transformers: Pioneering Wonderful Large-Scale Pre-Trained Language Model Boom - KiKaBeN

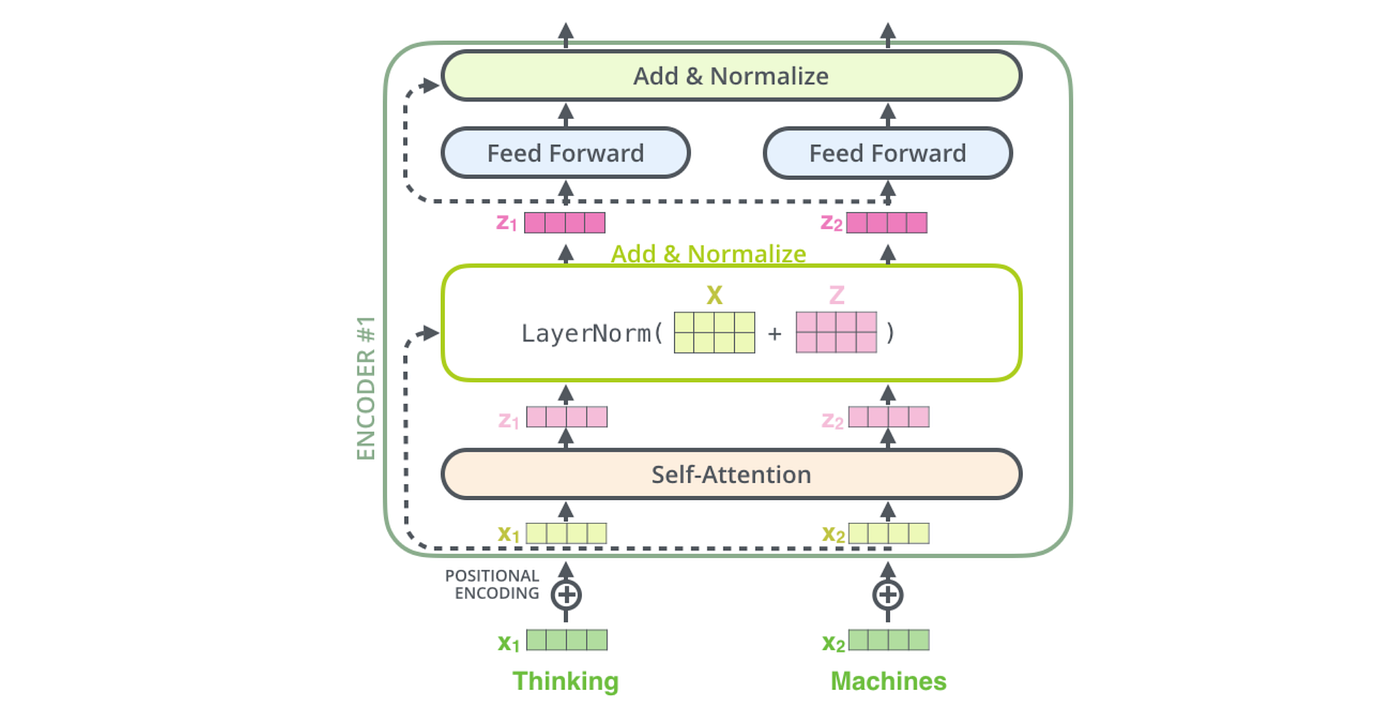

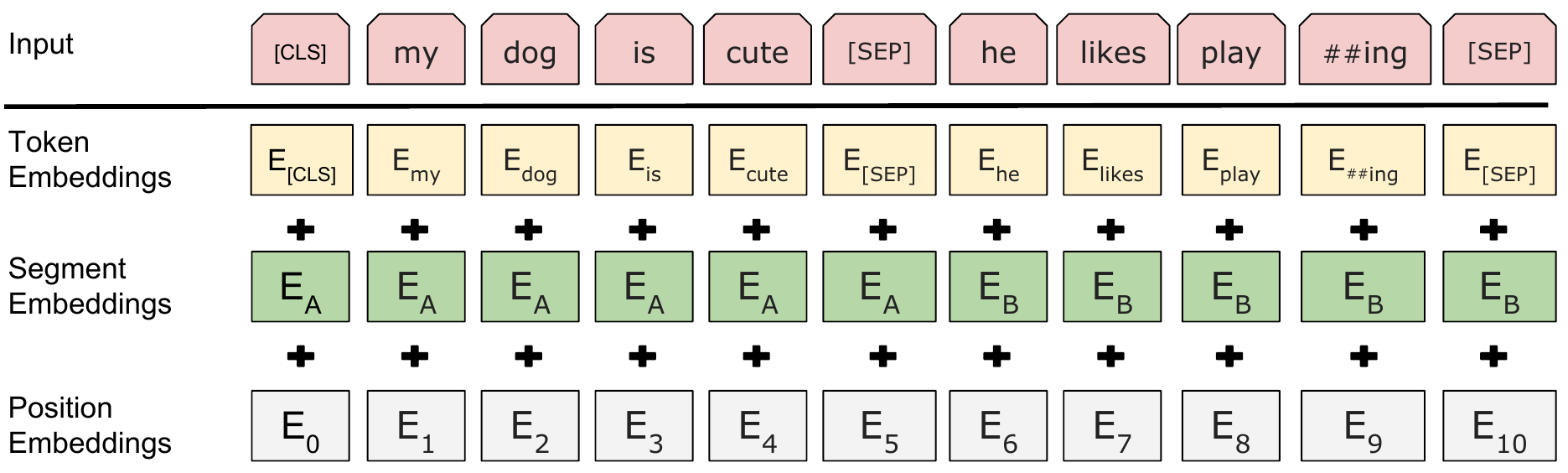

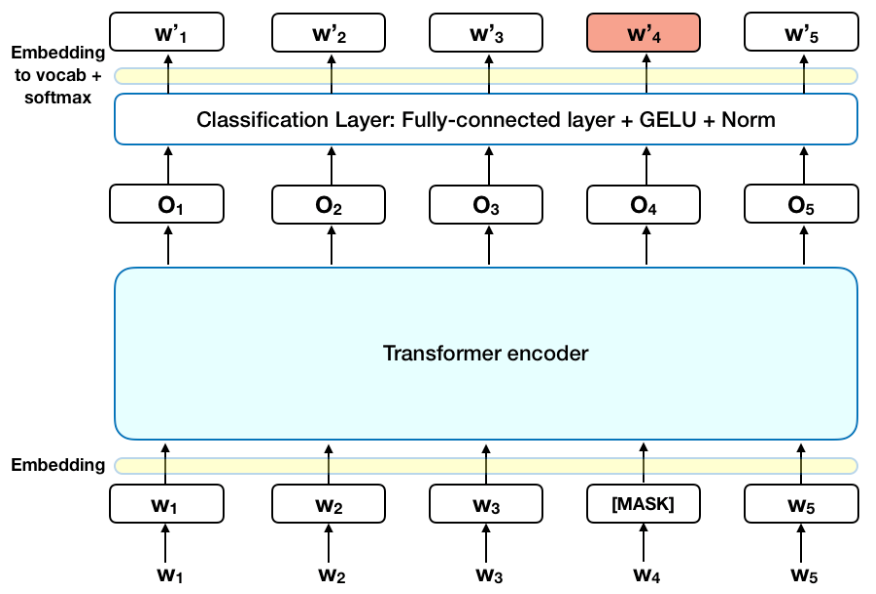

Intuitive Explanation of BERT- Bidirectional Transformers for NLP | by Renu Khandelwal | Towards Data Science

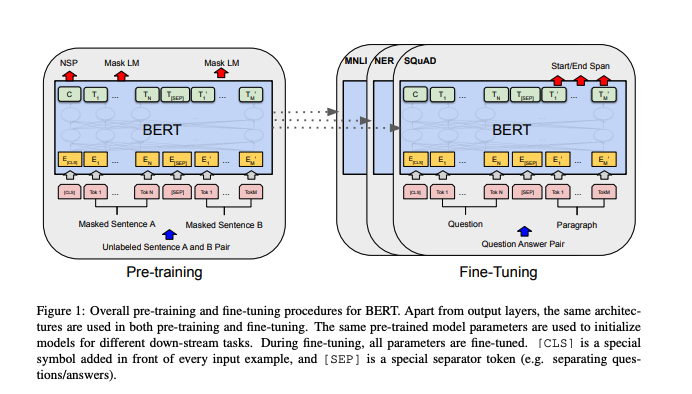

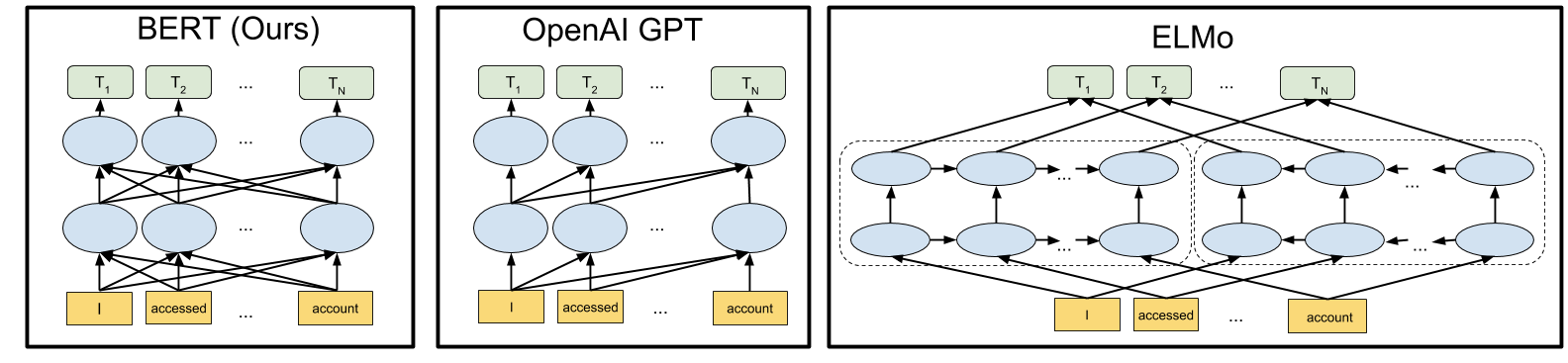

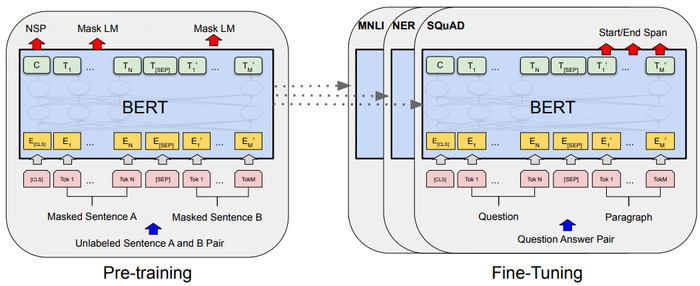

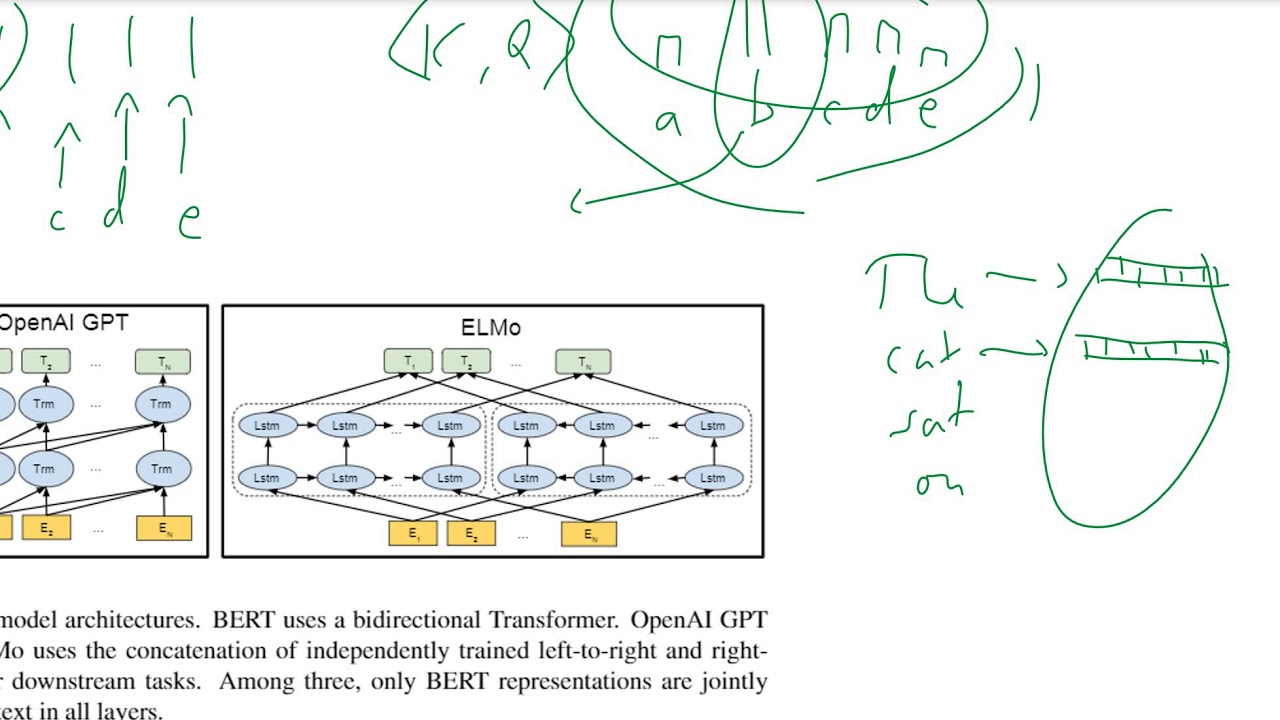

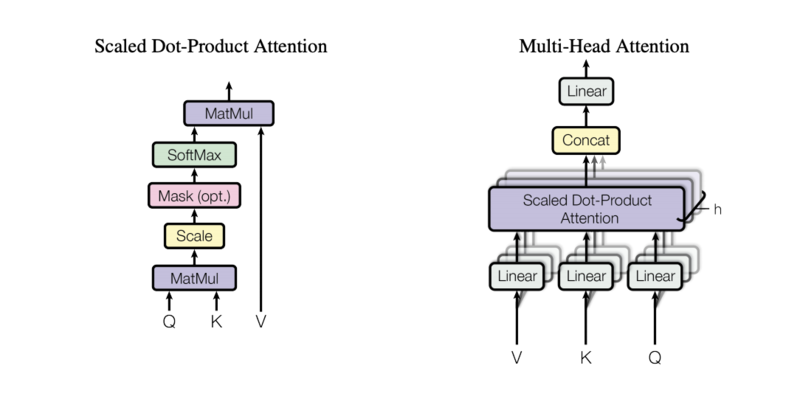

STAT946F20/BERT: Pre-training of Deep Bidirectional Transformers for Language Understanding - statwiki

BERT: Pre-training of Deep Bidirectional Transformers for Language Understanding on ShortScience.org

Review — BERT: Pre-training of Deep Bidirectional Transformers for Language Understanding | by Sik-Ho Tsang | Medium

Intuitive Explanation of BERT- Bidirectional Transformers for NLP | by Renu Khandelwal | Towards Data Science

An overview of Bidirectional Encoder Representations from Transformers... | Download Scientific Diagram